This post looks at recent research on using finetuned adapters for customized image generation. It covers two ideas: Low-Rank Adaptation (LoRA), a parameter-efficient finetuning method, and Stylus, a system that automates adapter selection so that each prompt is paired with suitable adapters.

A Glimpse into the World of Finetuned Adapters

Finetuned adapters have changed how generative image models are customized. Instead of retraining and storing a full copy of a model for every style or concept, an adapter stores only a small set of additional weights, which keeps storage requirements low. That has made large open-source adapter platforms practical: more than 100,000 adapters are now available to anyone creating AI art.
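To see why a low-rank adapter is so much smaller than the layer it modifies, here is a minimal sketch of the standard LoRA update, W' = W + B·A, where B and A are two thin matrices of rank r. The helper names (`matmul`, `apply_lora`, `lora_param_counts`) and the layer sizes are illustrative assumptions, not taken from the research discussed here.

```python
# Minimal LoRA sketch in pure Python (helper names are hypothetical).

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def apply_lora(W, B, A, scale=1.0):
    """Return W + scale * (B @ A), the adapted weight matrix."""
    delta = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]

def lora_param_counts(d_out, d_in, rank):
    """Parameters in the full matrix vs. in the two low-rank factors."""
    return d_out * d_in, rank * (d_out + d_in)

# Tiny worked example: a 2x2 base weight and a rank-1 adapter.
W = [[1, 0], [0, 1]]
B = [[1], [2]]        # shape (2, 1)
A = [[3, 4]]          # shape (1, 2)
W_adapted = apply_lora(W, B, A)

# Assumed layer size of 768x768 with rank 8, chosen only for illustration.
full_params, adapter_params = lora_param_counts(768, 768, 8)
print(W_adapted, full_params, adapter_params)
```

With these assumed sizes, the adapter stores 12,288 values against 589,824 for the full matrix, which is the kind of saving that makes hosting very large adapter collections feasible.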

Challenges and Innovations in Adapter Selection

Finetuned adapters improve results, but automatically selecting the relevant ones for a user's prompt remains a hard problem. The task differs from the conventional retrieval-based systems used in text ranking in three ways: adapters must first be converted into lookup embeddings, their documentation is often low quality, and little training data is available for matching adapters to prompts.

Introducing the Stylus System: A Game-Changer in Image Generation

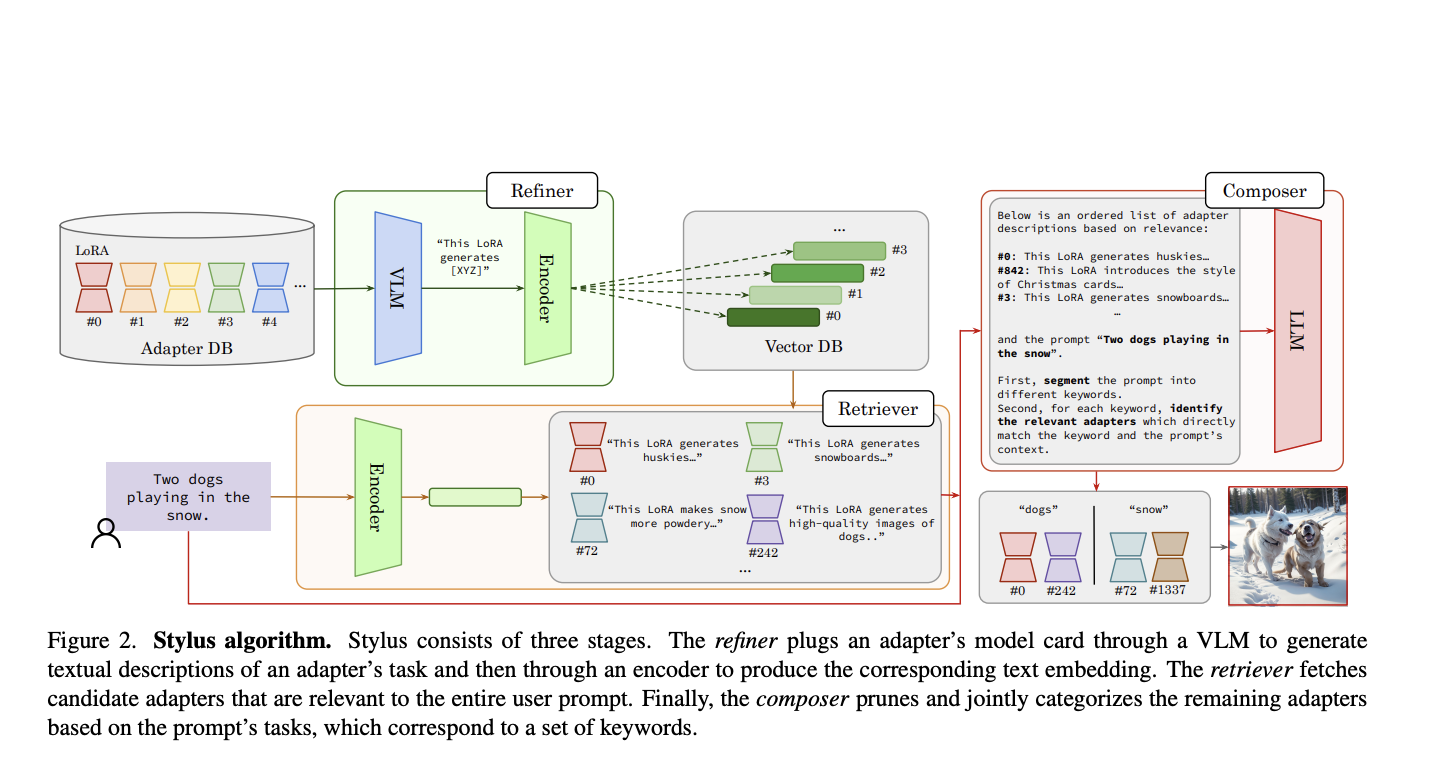

Stylus is a system developed by researchers from UC Berkeley and CMU MLD that automates adapter selection so that generative models produce diverse, high-quality images. It uses a three-stage framework. First, concise adapter descriptions are pre-computed as lookup embeddings. Second, the relevance of candidate adapters is assessed against the user's prompt. Third, the selected adapters are assigned to tasks for image generation.
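The lookup step in a framework like this can be pictured as a nearest-neighbor search over pre-computed embeddings. The sketch below ranks adapters by cosine similarity to a prompt embedding. It is an illustration only: the adapter names, the three-dimensional toy vectors, and the `retrieve` function are assumptions, and a real system would obtain embeddings from a text encoder rather than hard-coding them.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def retrieve(prompt_vec, adapter_index, k=2):
    """Return the names of the k adapters most similar to the prompt."""
    scored = [(cosine(prompt_vec, vec), name) for name, vec in adapter_index.items()]
    scored.sort(reverse=True)
    return [name for _, name in scored[:k]]

# Hypothetical pre-computed embeddings of adapter descriptions.
ADAPTER_INDEX = {
    "watercolor_style": [0.9, 0.1, 0.0],
    "cyberpunk_city": [0.0, 0.2, 0.9],
    "fluffy_cat": [0.1, 0.9, 0.1],
}

# Hypothetical embedding of a prompt such as "a watercolor painting of a cat".
prompt_vec = [0.8, 0.5, 0.0]
top_adapters = retrieve(prompt_vec, ADAPTER_INDEX, k=2)
print(top_adapters)
```

Because the adapter embeddings are computed ahead of time, only the prompt needs to be embedded at request time, which keeps the search cheap even across a very large adapter collection.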

Unlocking the Potential of Stylus in Image Creation

Stylus uses a binary mask to control how many adapters are applied per task. This preserves image diversity and avoids the problems that arise when many adapters are composed at once. In the reported evaluation, Stylus outperforms popular Stable Diffusion (SD 1.5) checkpoints, earning higher preference scores from both human evaluators and vision-language models.
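One way to picture the masking idea is as a 0/1 vector over each task's candidate adapters, where only the adapters marked 1 are kept. The sketch below is a loose illustration and not the exact procedure used by Stylus: the random sampling strategy, the `max_active` limit, and the task and adapter names are all assumptions.

```python
import random

def sample_mask(n_candidates, max_active, rng):
    """Sample a 0/1 mask with at most max_active ones (assumed strategy)."""
    n_active = rng.randint(0, min(max_active, n_candidates))
    active = set(rng.sample(range(n_candidates), n_active))
    return [1 if i in active else 0 for i in range(n_candidates)]

def apply_masks(assignments, max_active, seed=0):
    """Keep only the adapters whose mask entry is 1, task by task."""
    rng = random.Random(seed)
    selected = {}
    for task, adapters in assignments.items():
        mask = sample_mask(len(adapters), max_active, rng)
        selected[task] = [a for a, m in zip(adapters, mask) if m]
    return selected

# Hypothetical task-to-adapter assignments.
ASSIGNMENTS = {
    "cat": ["fluffy_cat", "cartoon_cat", "realistic_fur"],
    "style": ["watercolor_style", "ink_wash"],
}

# Different seeds give different masks, so repeated generations vary.
for seed in range(3):
    print(seed, apply_masks(ASSIGNMENTS, max_active=2, seed=seed))
```

Capping the number of active adapters limits how many sets of weights are merged for one image, while resampling the mask between generations lets the same prompt draw on different adapter combinations.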

Conclusion: A New Era of Automated Image Generation

Stylus offers a practical way to automate the selection and composition of adapters in generative image models, improving visual fidelity, textual alignment, and image diversity. The same approach may also carry over to other image-to-image application domains.

For the complete method and results, see the full research paper and project details.